
As my disks tend to get quite messy over time, I had to devise ways to reclaim space, which means both spotting big directories and files and dealing with the thousands of duplicates you can't delete one by one.

Here's my toolbox for cleaning my disks on Linux, in 3 major steps:

- Confirm the problem with dysk

- Spot and delete big files and directories with broot

- Delete thousands of duplicates with backdown

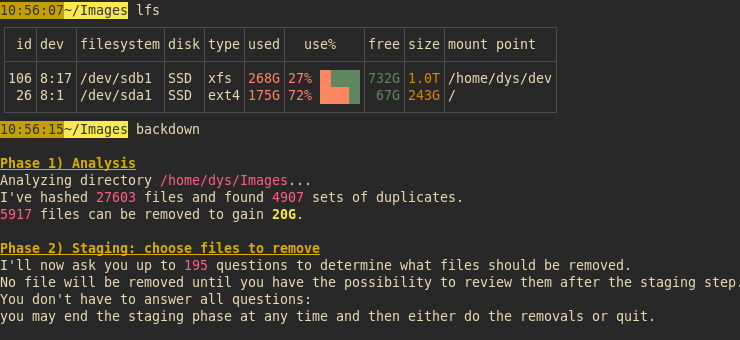

Confirm the problem with dysk

A popular tool for getting an idea of how much space is left on your filesystems is df.

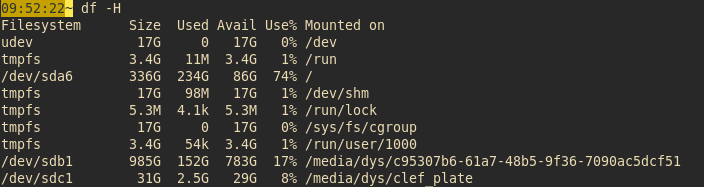

You get the best output with df -H.

Here's an example:

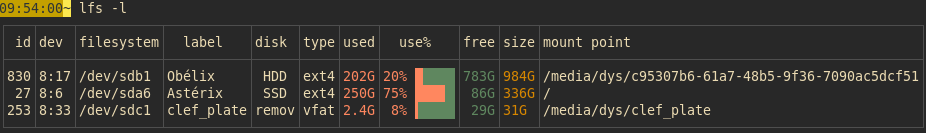

Personally, I don't find it readable enough, so I made dysk (previously known as lfs).

Now I instantly recognize Asterix, my fast and small disk, and Obelix, the big and slow one, and there's no noise.

And I immediately see whether there's a lot of space remaining on each of them.
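If you want the same quick check from a script, here's a minimal sketch that uses only Python's standard library. It isn't how dysk or df work internally; the function names `human_si` and `usage_line` are made up for this example, and sizes use SI units (powers of 1000), like df -H.

```python
import shutil


def human_si(n_bytes: int) -> str:
    """Format a byte count with SI (power-of-1000) units, as df -H does."""
    value = float(n_bytes)
    for unit in ("B", "kB", "MB", "GB", "TB"):
        if value < 1000 or unit == "TB":
            # Plain bytes need no decimals; larger units get one.
            return f"{value:.0f}{unit}" if unit == "B" else f"{value:.1f}{unit}"
        value /= 1000


def usage_line(mount_point: str) -> str:
    """One readable line describing the filesystem that holds mount_point."""
    usage = shutil.disk_usage(mount_point)
    percent_used = 100 * usage.used / usage.total if usage.total else 0.0
    return (
        f"{mount_point}: {human_si(usage.free)} free of "
        f"{human_si(usage.total)} ({percent_used:.0f}% used)"
    )


if __name__ == "__main__":
    print(usage_line("/"))
```

Pass it any path on the disk you care about; the numbers are those of the filesystem containing that path.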

Spot and delete big files and directories with broot

The second tool is broot, in "whale spotting" mode.

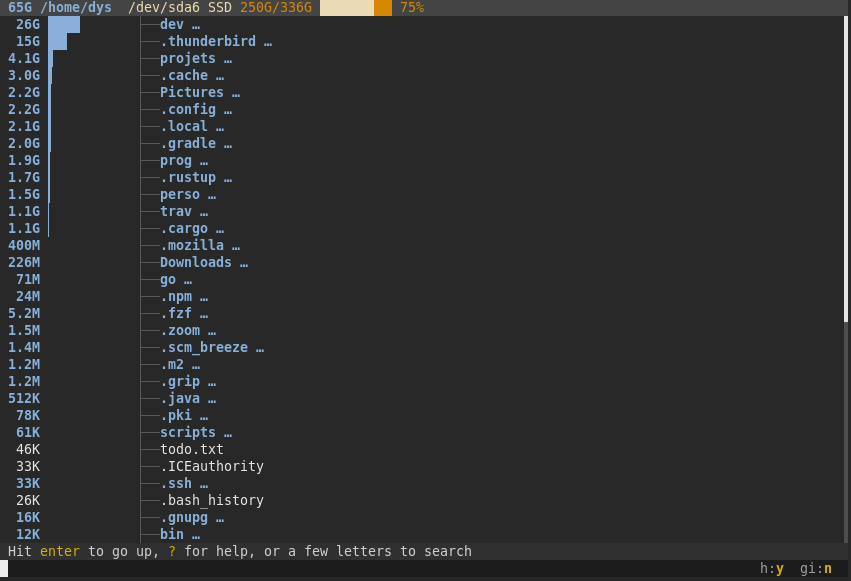

Let's launch it with br -w.

Files and directories are sorted by total size:

At the top of the screen, you can recognize the usage bar for the current disk.

You may move to a directory and enter it with the Enter key.
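The idea behind this view, sorting each entry by the total size of everything under it, can be sketched in a few lines of Python. This is only an illustration of the principle, not broot's implementation, and `total_size` and `biggest_children` are names invented for the example.

```python
import os


def total_size(path: str) -> int:
    """Total size in bytes of a file, or of all files under a directory.

    Symlinks are not followed, so a link counts only for its own size.
    """
    if os.path.islink(path) or os.path.isfile(path):
        return os.lstat(path).st_size
    total = 0
    # onerror swallows unreadable directories instead of aborting the walk
    for root, _dirs, files in os.walk(path, onerror=lambda err: None):
        for name in files:
            try:
                total += os.lstat(os.path.join(root, name)).st_size
            except OSError:
                pass  # the file vanished or is unreadable: skip it
    return total


def biggest_children(directory: str) -> list[tuple[int, str]]:
    """Return (size, name) for each entry of directory, biggest first."""
    sized = [(total_size(entry.path), entry.name) for entry in os.scandir(directory)]
    return sorted(sized, reverse=True)


if __name__ == "__main__":
    for size, name in biggest_children(".")[:10]:
        print(f"{size:>15,d}  {name}")
```

It sums apparent file sizes, so the figures can differ a little from what the disk really allocates.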

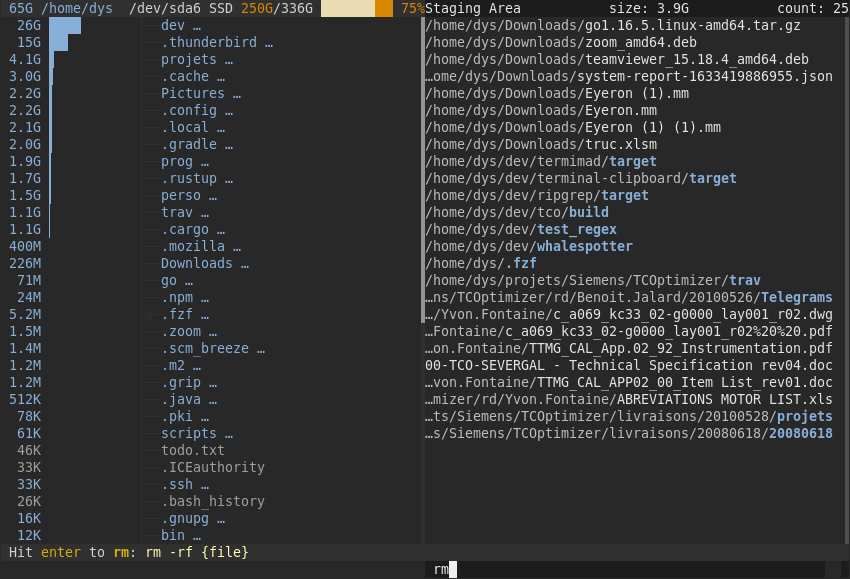

You may remove a file with the :rm command (see the introduction to broot) but I personally prefer to stage files then remove them all in one go.

Staging a file is done with ctrl-g, which sends it to a list on the right, with the total size displayed on top.

When you've staged all the files you want to remove, you move to the staging panel with ctrl-→ then type :rm:

A hit on enter and the files are gone.

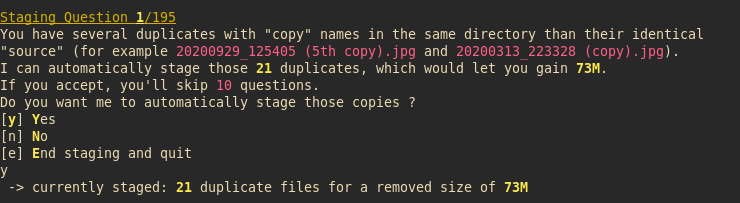

Delete thousands of duplicates with backdown

The third tool is for when there's no file or directory that you can obviously remove, but you know you have a lot of duplicates. You don't know exactly which file is saved where, and it's so messy that you'd spend hours just removing a few files.

A typical example for me is the many files I copy into specific folders, backups, etc.

backdown finds all the duplicates in a chosen directory, but that's only the start. The smart part is asking you relevant questions to decide which files to keep and which files to delete (while always guaranteeing to keep at least one item in any set of duplicates).
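To make the first step concrete, here's a minimal sketch of one common way to find sets of identical files: group by size first (cheap), then hash only the files that share a size. This is an assumption about the general technique, not a description of backdown's actual algorithm, and `file_digest` and `find_duplicate_sets` are hypothetical names.

```python
import hashlib
import os
from collections import defaultdict


def file_digest(path: str, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file's content, read in chunks to keep memory low."""
    hasher = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


def find_duplicate_sets(root: str) -> list[list[str]]:
    """Return lists of paths under root whose contents are identical."""
    by_size = defaultdict(list)
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue  # a symlink is not a second copy of the data
            try:
                by_size[os.path.getsize(path)].append(path)
            except OSError:
                continue  # unreadable or vanished file
    duplicate_sets = []
    for size, paths in by_size.items():
        # Empty files are skipped: removing them frees nothing.
        if size == 0 or len(paths) < 2:
            continue
        by_digest = defaultdict(list)
        for path in paths:
            try:
                by_digest[file_digest(path)].append(path)
            except OSError:
                continue
        duplicate_sets.extend(
            sorted(group) for group in by_digest.values() if len(group) > 1
        )
    return duplicate_sets
```

Finding the sets is the easy part. Deciding which member of each set to keep is where a tool asking good questions saves the hours.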

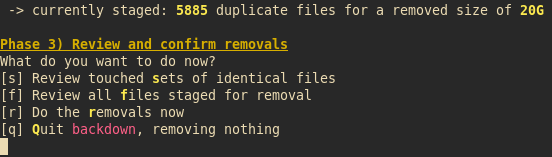

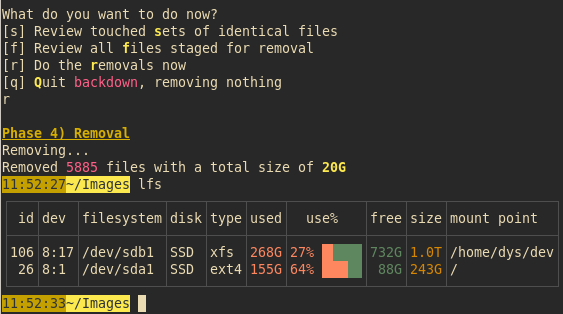

Here's a (real) recent session, starting with dysk telling me I have only 67GB free on one of my disks, then the launch of backdown in my "Images" directory:

There's a lot here. Let's have a look.

First, backdown does a short analysis... or a long one, depending on your disk speed.

After that, backdown knows there are 4907 sets of identical files and that 5917 files could be removed to gain 20GB.
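These two numbers are linked: since at least one file of each set is kept, the removable count is the number of files in all sets minus the number of sets (here, 4907 sets and 5917 removable files means 10824 files are involved). A small sketch of that arithmetic, with a hypothetical `removable_stats` helper and made-up input data:

```python
def removable_stats(duplicate_sets: list[tuple[int, int]]) -> tuple[int, int]:
    """Compute (removable_files, reclaimable_bytes).

    Each item of duplicate_sets is (file_count, file_size_in_bytes) for one
    set of identical files. One file per set is always kept.
    """
    removable_files = sum(count - 1 for count, _size in duplicate_sets)
    reclaimable_bytes = sum((count - 1) * size for count, size in duplicate_sets)
    return removable_files, reclaimable_bytes


if __name__ == "__main__":
    # Illustrative data: one pair of 1000-byte files, one triple of 500-byte files
    print(removable_stats([(2, 1000), (3, 500)]))
```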

Yes, that's a lot. Maybe you're cleaner than me and you think you don't have many duplicates. Maybe you're right. Maybe you'd be surprised.

Backdown explains how the session will go, and that it may ask up to 195 questions. Each question depends on the previous answers, so the 195 we see here is a maximum, not the real number. Backdown first asks the questions that help remove either a lot of files or a lot of potential questions.

Now the questions.

The first question lets you deal, with a single hit on the enter key, with all files whose names reveal they're basic copies when the original is in the same directory.
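As an illustration of what "names that reveal a copy" can mean, here's a sketch that flags files like "cat (2).jpg" only when "cat.jpg" is in the same directory and in the same set of duplicates. The suffix patterns below are my assumption of typical copy names, not the rules backdown applies, and `original_name` and `obvious_copies` are hypothetical helpers.

```python
import os
import re
from typing import Optional

# Assumed copy markers at the end of the stem: " (2)", " copy", " - Copy"
COPY_SUFFIX = re.compile(r"^(?P<stem>.+?)(?: \(\d+\)| copy| - Copy)$")


def original_name(file_name: str) -> Optional[str]:
    """Return the name the file was presumably copied from, or None."""
    stem, ext = os.path.splitext(file_name)
    match = COPY_SUFFIX.match(stem)
    return match.group("stem") + ext if match else None


def obvious_copies(duplicate_set: list[str]) -> list[str]:
    """Paths of the set named as copies of a set member in the same directory."""
    present = set(duplicate_set)
    copies = []
    for path in duplicate_set:
        directory, file_name = os.path.split(path)
        candidate = original_name(file_name)
        # Flag only when the presumed original really is an identical file
        # sitting next to the copy, so the original always survives.
        if candidate and os.path.join(directory, candidate) in present:
            copies.append(path)
    return copies
```

Because a file is flagged only when its original is present, removing everything this returns still leaves at least one file in each set.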

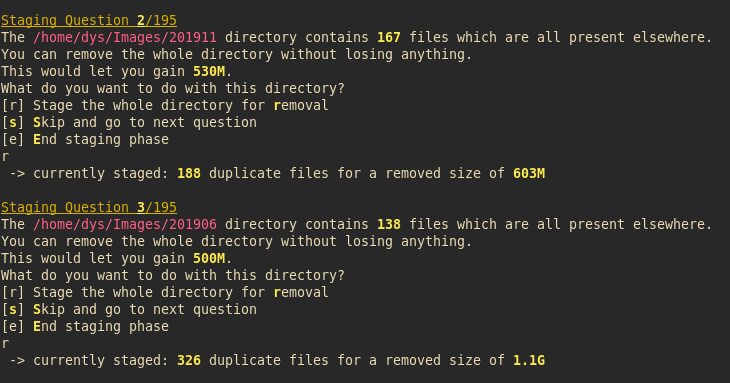

The second and third questions are examples of cases where a directory is only made of duplicates. It would be hard to check this manually, but backdown did it for you.

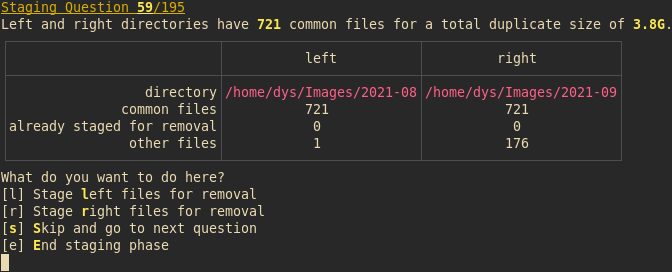

There are several other types of questions. Here's the most frequent one:

You don't have to answer all questions. You may stop when they don't make you gain a lot (and maybe you'll launch backdown again another day).

When you hit e, you're shown this choice:

Let's see what we reclaimed:

88GB free instead of 67GB, by just removing duplicates.